With thanks to Micah Allen (@neuroconscience) for pointing this one out.

My day job is as an fMRI (functional magnetic resonance imaging) researcher, so you can imagine how tickled I was when I came across a brand-new neurobollocks-peddler who’s chosen to set up shop right on my patch!

Donald H. Marks is a New Jersey doctor who in 2013 set up a company called ‘Millennium Magnetic Technologies’. Readers old enough to remember Geocities sites from the mid-90s will probably derive some pleasant nostalgia from visiting the MMT website, which is refreshingly unencumbered by anything so prosaic as CSS. Anyway, MMT offer a range of services, under the umbrella of “disruptive patented specialty neuro imaging and testing services”. These include the “objective” documentation of pain, forensic interrogation using fMRI, and (most intriguingly) thought and dream recording.

This last one is something that’s expanded on at some length in a breathlessly uncritical article in the hallowed pages of International Business Times (no, me neither). According to the article:

“The recording and storing of thoughts, dreams and memories for future playback – either on a screen or through reliving them in the mind itself – is now being offered as a service by a US-based neurotechnology startup.

Millenium Magnetic Technologies (MMT) is the first company to commercialise the recording of resting state magnetic resonance imaging (MRI) scans, offering clients real-time stream of consciousness and dream recording.”

And he does this using his patented (of course) ‘cognitive engram technology’, and all for the low, low price of $2000 per session.

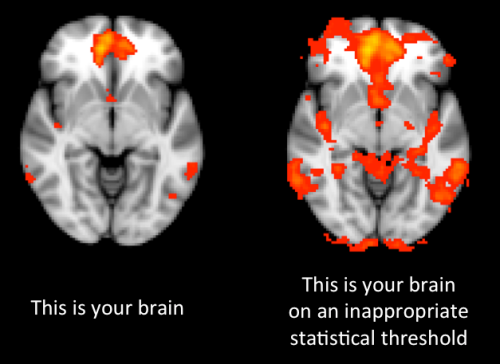

It’s clear from the article and the MMT website that he’s using some kind of MVPA (multi-voxel pattern analysis) technique with the fMRI data. This technique first emerged about 10 years ago, and is based on machine-learning algorithms. Briefly, an algorithm is trained on one set of fMRI data, and ‘learns’ to distinguish between the patterns of activity evoked by different conditions. The algorithm is then tested on a new, independent set of data to see whether what it learned generalises. If the two sets of data contain the same features (e.g. the participant was exposed to the same stimulus in both scans), the algorithm will identify the parts of the brain that show a consistent response. The logic is that if a brain area consistently shows the same pattern of response to a stimulus, that area must be involved in representing some aspect of that stimulus. This family of techniques has turned out to be very useful in lots of ways, but one of the most interesting applications has been in so-called ‘brain-reading’ studies. In a sense, decoding the test data amounts to making predictions about the participant’s mental state: the algorithm tries to predict what stimulus they were experiencing at the time of the scan. A relatively accessible introduction to these kinds of studies can be found here.
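To make the train/test logic a bit more concrete, here is a minimal sketch in Python of one simple flavour of MVPA decoding: a correlation-based nearest-centroid classifier run on simulated data. Everything in it is hypothetical. The ‘voxel patterns’ are random numbers, the labels ‘people’ and ‘buildings’ just echo the categories in the dream study, and the function names are made up for illustration. Real analyses use preprocessed fMRI data, proper cross-validation, and often more sophisticated classifiers (e.g. support vector machines).

```python
import random
import statistics


def make_pattern(n_voxels, rng):
    """Hypothetical 'true' response pattern evoked by one stimulus category."""
    return [rng.gauss(0.0, 1.0) for _ in range(n_voxels)]


def simulate_trials(pattern, n_trials, noise_sd, rng):
    """Noisy single-trial measurements of an underlying pattern."""
    return [[v + rng.gauss(0.0, noise_sd) for v in pattern] for _ in range(n_trials)]


def pearson(a, b):
    """Pearson correlation between two equal-length patterns."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def train(train_data):
    """'Training': average each label's trials, voxel by voxel, into a centroid."""
    return {label: [statistics.fmean(vox) for vox in zip(*trials)]
            for label, trials in train_data.items()}


def predict(model, pattern):
    """Predict the label whose centroid correlates best with a new pattern."""
    return max(model, key=lambda label: pearson(model[label], pattern))


def accuracy(model, test_data):
    """Fraction of held-out trials assigned their true label."""
    results = [predict(model, trial) == label
               for label, trials in test_data.items() for trial in trials]
    return sum(results) / len(results)


def run_demo(noise_sd, n_voxels=50, n_trials=20, seed=0):
    """Train on one simulated 'scan', then test on an independent one."""
    rng = random.Random(seed)
    patterns = {label: make_pattern(n_voxels, rng) for label in ("people", "buildings")}
    train_data = {k: simulate_trials(p, n_trials, noise_sd, rng) for k, p in patterns.items()}
    test_data = {k: simulate_trials(p, n_trials, noise_sd, rng) for k, p in patterns.items()}
    return accuracy(train(train_data), test_data)


if __name__ == "__main__":
    for noise_sd in (0.5, 5.0, 20.0):
        print(f"noise sd {noise_sd}: test accuracy {run_demo(noise_sd):.2f}")
```

Raising `noise_sd` pushes the test accuracy towards the 50% chance level. The classifier can only recover what the measured patterns reliably contain.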

So, the good Dr Marks (who, by the way, has but a single paper using fMRI to his name on PubMed) is using this technology to read people’s minds. Needless to say, however, there are several issues with this. Firstly, to produce even vaguely accurate results, these algorithms generally need a great deal of data. The dream-decoding study that MMT link to on their website (commentary, original paper) required participants to sleep in the MRI scanner in three-hour blocks, on between seven and ten occasions. Even after all that, the accuracy of the predictive decoding (distinguishing between pairs of stimulus categories, e.g. people vs. buildings) was only between 55 and 60%. Statistically significant, but not terribly impressive, given that the chance level was 50%.
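Whether an accuracy of 55-60% counts as ‘statistically significant’ depends on how many test trials it is computed over, a number not given here. The sketch below is a one-sided exact binomial test against 50% chance, using hypothetical trial counts. It is not necessarily the test the original study used. It shows that the same 58% accuracy falls short of p < 0.05 over 100 trials but is highly significant over 1,000:

```python
from math import comb


def binomial_p_value(correct: int, n: int) -> float:
    """One-sided exact binomial test against 50% chance.

    Returns P(X >= correct) for X ~ Binomial(n, 0.5): the probability of
    scoring at least this well by pure guessing.
    """
    if not 0 <= correct <= n:
        raise ValueError("correct must be between 0 and n")
    # With p = 0.5 every outcome has probability 1 / 2**n, so we sum exact
    # integer binomial coefficients and divide once at the end.
    return sum(comb(n, k) for k in range(correct, n + 1)) / 2 ** n


if __name__ == "__main__":
    # Hypothetical trial counts, both at 58% accuracy.
    for correct, n in ((58, 100), (580, 1000)):
        print(f"{correct}/{n} correct ({correct / n:.0%}): "
              f"p = {binomial_p_value(correct, n):.2g}")
```

Significance tells you the decoder is doing better than guessing. It says nothing about whether it is accurate enough to be useful.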

My aim here is not to denigrate this particular study (which is, honestly, a pretty amazing achievement); it’s to point out that this technology is not even close to being a practical commercial proposition. These methods are improving all the time, but they’re still a long way from being reliable, convenient, or robust enough to be a true sci-fi-style, general-purpose mind-reading technology.

This apparently doesn’t bother Dr Marks, though. He’s charging people $2000 a session to have their thoughts ‘recorded’ in the vague hope that some kind of future technology will be able to play them back:

“The visual reconstruction is kind of crude right now but the data is definitely there and it will get better. It’s just a matter of refinement,” Marks says. “That information is stored – once you’ve recorded that information it’s there forever. In the future we’ll be able to reconstruct the data we have now much better.”

No. N. O. No. The data is absolutely, categorically not there. Standard fMRI scans these days record at a resolution of 2-3mm. A cubic volume of brain tissue 2-3mm on each side probably contains several hundred thousand neurons, each of which may be responding to different stimuli or involved in different processes. fMRI is therefore a very, very blunt tool for capturing the fine detail of what’s going on. It’s like trying to take apart a Swiss watch mechanism when the only tool you have is a giant pillow, and you’re wearing boxing gloves. A further complication is that we still have so much to learn about exactly how and where memories are represented and stored in the brain. To accurately capture memories, thoughts, and even dreams, we’ll need a much, much better brain-recording technology. It may be possible someday (and that ‘someday’ might even be relatively close), but the technology simply hasn’t been invented yet.
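To put the 2-3mm figure in perspective, here is a back-of-the-envelope calculation in Python. The voxel volumes are plain geometry (8 mm³ at 2mm, 27 mm³ at 3mm, so a 3mm voxel averages over more than three times as much tissue as a 2mm one). The neuron density, `ASSUMED_NEURONS_PER_MM3`, is a hypothetical round number chosen for illustration, not a measured value. Real densities vary by brain region and by estimate, so treat the counts as rough orders of magnitude only.

```python
# Rough illustration of how much tissue a single fMRI voxel averages over.
# ASSUMED_NEURONS_PER_MM3 is a hypothetical round figure for illustration;
# real neuronal densities vary by brain region and by estimate.
ASSUMED_NEURONS_PER_MM3 = 40_000


def voxel_volume_mm3(side_mm: float) -> float:
    """Volume (mm^3) of a cubic voxel with the given side length (mm)."""
    return side_mm ** 3


def neurons_per_voxel(side_mm: float,
                      density_per_mm3: float = ASSUMED_NEURONS_PER_MM3) -> float:
    """Approximate neuron count in one voxel under the assumed density."""
    return voxel_volume_mm3(side_mm) * density_per_mm3


if __name__ == "__main__":
    for side in (2.0, 3.0):
        print(f"{side:.0f} mm voxel: {voxel_volume_mm3(side):.0f} mm^3, "
              f"~{neurons_per_voxel(side):,.0f} neurons (assumed density)")
```

Whatever density you plug in, every one of those neurons contributes to a single number per voxel per time point.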

So, the idea that you can read someone’s mind in a single session, and preserve their treasured memories on a computer hard disk for future playback, is simply hogwash right now. I’m as excited by the possibilities in this area as the next geek, but it just can’t be done yet. Dr Marks is charging people $2000 a pop for a pretty useless service, no matter how optimistic he might be about some mythical future mind-reading playback device.

NB. I’ve got a lot more to say about MMT’s other services too, but this post’s got a bit out of hand already, so I’ll save that for a future one…

The NeuroBusiness 2015 Conference

“I very much welcome the opportunity to bring neuroscientists together with business.”

This is a noble aim, but apparently no-one let the conference organisers know about it, as browsing the list of speakers quickly reveals that there were no neuroscientists invited. None. The closest we have are Dr Jenny Brockis, who appears to be a medic who has found a more lucrative calling in brain-fitness-related motivational speaking (*yawn*), and Dr Paul Brown, a clinical psychologist who has… let’s say, an ‘interesting’ background, with various academic appointments in South-East Asia. Dr Brown is also the author of the book ‘Neuropsychology for Coaches’, the title of which suggests he doesn’t really know what the term ‘neuropsychology’ refers to. Unless, of course, it’s a book for American football coaches who have to deal with regular traumatic brain injuries in their players, which I doubt.

Anyway, I’ve no idea if the conference was a roaring success or not, since, as a neuroscientist, I wasn’t invited. What I do know is that it turned into an utter debacle on Twitter. Conference attendees started tweeting nonsensical things like:

“Hack your brains dopamine to become addicted to success!”

or

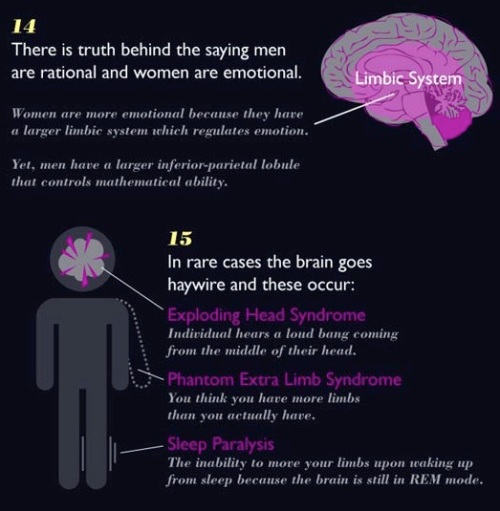

“Men’s brains fire back to front, women’s fire side to side. That’s why women multi task well”

…and the neuroscientists on Twitter quickly and gleefully piled on with sarcasm, jokes and general rubbishing. At one point it became really rather difficult to tell which were genuine #neurobusiness2015 tweets and which were fake sarcastic ones. I did notice there were significantly fewer tweets from the conference on day 2. Was some announcement made? It was all jolly good fun for us neuroscientists, but at some point I did start to feel a bit sorry for the conference organisers.

However, I have a suggestion, one which would prevent something like this happening again. If any of the conference organisers happen to be reading this, my suggestion for NeuroBusiness 2016 (if it happens) is this:

INVITE SOME NEUROSCIENTISTS. People who actually know something about the brain. Some of us are actually quite engaging speakers, who would relish the opportunity to emerge from our dark basement labs and spend a day interacting with normal people. We’re not all massive nerds, obsessed with the abstract minutiae of our particular area of research. Well… I mean, we actually are, but that doesn’t mean we can’t function normally as well. Some of us even like to think about how neuroscience can be applied in everyday life too. Just have a look around at people’s CVs and publications, and pick a few good ones. Or have a look on Speakezee, or even just send me an email through this site, and I’ll send you a list of suggestions.

Business people – it’s great that you’re interested in the brain. We get it. We are too, that’s why we do what we do. Unfortunately there are a lot of people out there who have realised that sticking the neuro- prefix on some old load of bollocks is a jolly good whizz-bang way to make loads of money on the motivational speaking circuit. If your computer breaks, you wouldn’t call a motivational speaker, would you? You’d call an IT expert. If you want to know about the brain – ask a neuroscientist.


Posted in Commentary, Products

Tagged #neurobusiness2015, conference, neurobusiness, neuroleadership